Ensure High Availability for SQL Server on Amazon Web Services

Database and system administrators have long had a wide range of options for ensuring that mission-critical database applications remain highly available. Public cloud infrastructures, like those provided by Amazon Web Services, offer their own, additional high availability options backed by service level agreements. But configurations that work well in a private cloud might not be possible in the public cloud. Poor choices in the AWS services used, or in how they are configured, can cause failover provisions to fail when actually needed. This article outlines the various options available for ensuring high availability for SQL Server in the AWS cloud.

Options

For database applications, AWS gives administrators two basic choices, each of which has different high availability (HA) and disaster recovery (DR) provisions: Amazon Relational Database Service (RDS) and Amazon Elastic Compute Cloud (EC2).

RDS

RDS is a fully managed service suitable for mission-critical applications. It offers a choice of six different database engines, but its support for SQL Server is not as robust as it is for other choices like Amazon Aurora, MySQL and MariaDB. Here are some of the common concerns administrators have about using RDS for mission-critical SQL Server applications:

- Only a single mirrored standby instance is supported,

- Agent Jobs are not mirrored and, therefore, must be created separately in the standby instance,

- Failures caused by the database application software are not detected,

- Performance-optimized in-memory database instances are not supported,

- Performance can be adversely affected depending on the Availability Zone assignments (over which the customer has no control),

- SQL Server’s more expensive Enterprise Edition is needed for the data mirroring feature available only with Always On Availability Groups.

Elastic Compute Cloud

The other basic choice is the Elastic Compute Cloud with its substantially greater capabilities. This makes it the preferred choice when HA and DR are of paramount importance. A major advantage of EC2 is the complete control it gives admins over the configuration, and that presents admins with some additional choices.

Picking The Operating System

Perhaps the most consequential choice is which operating system to use: Windows or Linux. Windows Server Failover Clustering (WSFC) is a powerful, proven and popular capability that comes standard with Windows. But WSFC requires shared storage, and that is not available in EC2. Because Multi-AZ, and even Multi-Region, configurations are required for robust HA/DR protection, separate commercial or custom software is needed to replicate data across the cluster of server instances. Microsoft’s Storage Spaces Direct (S2D) is not an option here, as it does not support configurations that span Availability Zones.

The need for additional HA/DR provisions is even greater for Linux, which lacks a fundamental clustering capability like WSFC. Linux gives admins two equally bad choices for high availability: either pay for the more expensive Enterprise Edition of SQL Server to implement Always On Availability Groups, or struggle to make complex do-it-yourself (DIY) HA Linux configurations built on open source software work well.

Comparisons

Both of these choices undermine the cost-saving rationale for using open source software on commodity hardware in public cloud services. SQL Server for Linux is only available in the more recent (and more expensive) versions, beginning with SQL Server 2017. And the DIY HA alternative can be prohibitively expensive for most organizations. Indeed, making Distributed Replicated Block Device (DRBD), Corosync, Pacemaker and, optionally, other open source software work as desired at the application level under all possible failure scenarios can be extraordinarily difficult, which is why only very large organizations have the wherewithal (skill set and staffing) needed to even consider taking on the task.

Owing to the difficulty involved in implementing mission-critical HA/DR provisions for Linux, AWS recommends using a combination of Elastic Load Balancing and Auto Scaling to improve availability. But these services have their own limitations that are similar to those in the managed Relational Database Service.

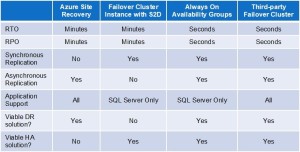

All of this explains why admins are increasingly choosing to use failover clustering solutions designed specifically for ensuring HA and DR protections in a cloud environment.

Failover Clustering Purpose-Built for the Cloud

The growing popularity of private, public and hybrid clouds has led to the advent of failover clustering solutions purpose-built for a cloud environment. These HA/DR solutions are implemented entirely in software that creates, as implied by the name, a cluster of servers and storage with automatic failover to assure high availability at the application level.

Most of these solutions provide a complete HA/DR solution that includes a combination of real-time block-level data replication, continuous application monitoring and configurable failover/failback recovery policies. Some of the more sophisticated solutions also offer advanced capabilities, such as support for Always On Failover Cluster Instances in the less expensive Standard Edition of SQL Server for both Windows and Linux, WAN optimization to maximize multi-region performance, and manual switchover of primary and secondary server assignments to facilitate planned maintenance, including the ability to perform regular backups without disruption to the application.

Most failover clustering software is application-agnostic, enabling organizations to have a single, universal HA/DR solution. This same capability also affords protection for the entire SQL Server application, including the database, logins, agent jobs, etc., all in an integrated fashion. Although these solutions are generally also storage-agnostic, enabling them to work with shared storage area networks (SANs), shared-nothing SANless failover clustering is usually preferred for its ability to eliminate potential single points of failure.

Support for Always On Failover Cluster Instances (FCIs) in the less expensive Standard Edition of SQL Server, with no compromises to availability or performance, is a major advantage. In a Windows environment, most failover clustering software supports FCIs by leveraging the built-in WSFC feature, which makes the implementation quite straightforward for both database and system administrators. Linux is becoming increasingly popular for SQL Server and many other enterprise applications, and some failover clustering solutions now make implementing HA/DR provisions on Linux just as easy as it is on Windows by offering application-specific integration.

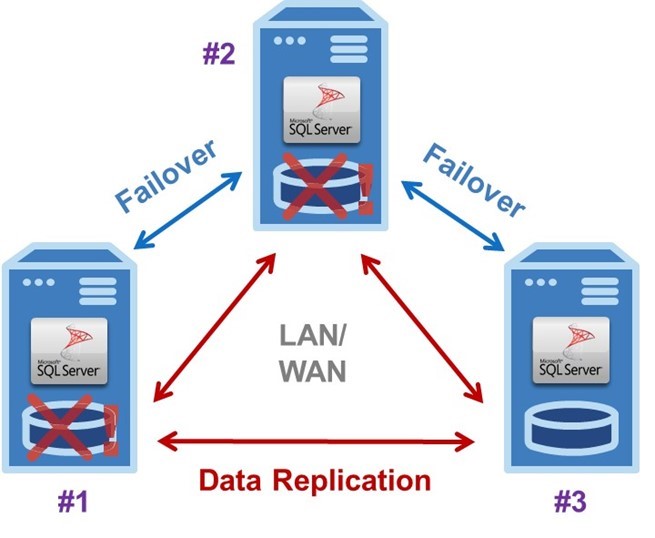

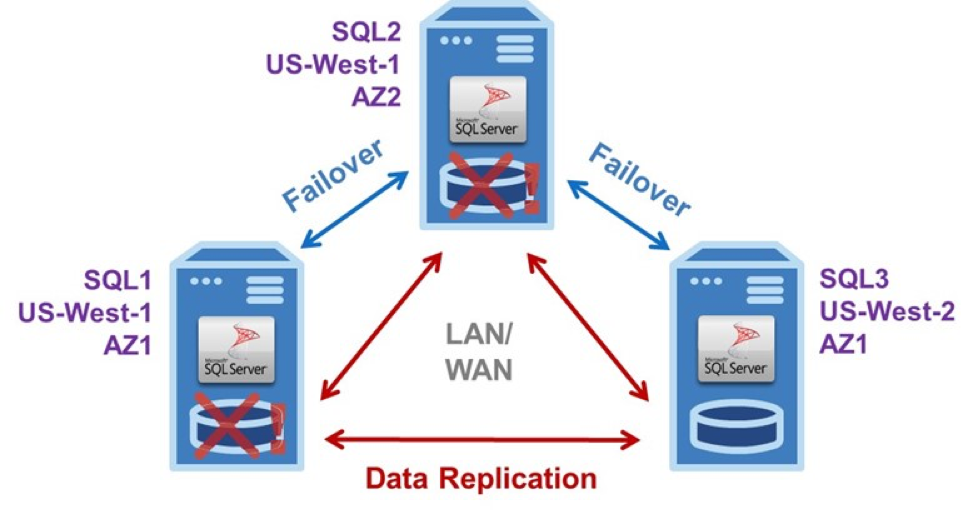

Typical Three-Node SANless Failover Cluster

The example EC2 configuration in the diagram shows a typical three-node SANless failover cluster configured as a Virtual Private Cloud (VPC) with all three SQL Server instances in different Availability Zones. To eliminate the potential for an outage in a local disaster affecting an entire region, one of the AZs is located in a different AWS region.

This three-node SANless failover cluster, with one active and two standby server instances, can handle two concurrent failures with minimal downtime and no data loss.

A three-node SANless failover cluster affords carrier-class HA and DR protections. The basic operation is the same in the LAN and/or WAN for Windows or Linux. Server #1 is initially the primary or active instance that replicates data continuously to both servers #2 and #3. If server #1 experiences a problem, an automatic failover to server #2 is triggered, and server #2 becomes the primary, replicating data to server #3.

Failure Detected

If the failure was caused by an infrastructure outage, the AWS staff would begin immediately diagnosing and repairing whatever caused the problem. Once fixed, server #1 could be restored as the primary, or server #2 could continue in that capacity, replicating data to servers #1 and #3. Should server #2 fail before server #1 is returned to operation, as shown, server #3 would become the primary after a manual failover. Of course, if the failure was caused by the application software or certain other aspects of the configuration, it would be up to the customer to find and fix the problem.
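The failover sequence described above can be sketched as a small state model. This is only an illustration, not how any particular clustering product works: the `Cluster` class, its methods and the node names are hypothetical, and every promotion is modeled as immediate, whereas in the configuration described here the promotion of server #3 would be a manual step.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Cluster:
    """Toy model of a SANless cluster: one primary, the rest standbys."""
    nodes: List[str]
    primary: str
    failed: Set[str] = field(default_factory=set)

    def replication_targets(self) -> List[str]:
        # The primary replicates to every healthy node other than itself.
        return [n for n in self.nodes if n != self.primary and n not in self.failed]

    def fail(self, node: str) -> None:
        # Mark a node as failed; if it was the primary, promote the next healthy node.
        self.failed.add(node)
        if node == self.primary:
            healthy = [n for n in self.nodes if n not in self.failed]
            if not healthy:
                raise RuntimeError("no healthy node left to promote")
            self.primary = healthy[0]

    def restore(self, node: str) -> None:
        # A repaired node rejoins as a standby; the current primary is unchanged.
        self.failed.discard(node)


cluster = Cluster(nodes=["server1", "server2", "server3"], primary="server1")
cluster.fail("server1")     # server2 takes over and replicates to server3
cluster.fail("server2")     # server3 takes over with no standby left
cluster.restore("server1")  # server1 returns as a standby of server3
```

After these three steps the model has `server3` as primary with `server1` as its only replication target, which mirrors the two-failure scenario in the text.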

SANless failover clusters can be configured with only a single standby instance, of course. But such a minimal configuration does require a third node to serve as a witness. The witness is needed to achieve a quorum for determining the assignment of the primary. This important task is normally performed by a domain controller in a separate AZ. Keeping all three nodes (primary, secondary and witness) in different AZs eliminates the possibility of losing more than one vote if any zone goes offline.
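The quorum argument can be checked with a few lines of arithmetic. The sketch below assumes one vote per node and a strict-majority rule. The function names and the `az-a`, `az-b`, `az-c` labels are hypothetical placeholders, not real AWS identifiers or any product's API.

```python
from typing import Dict


def has_quorum(votes_online: int, total_votes: int) -> bool:
    # Quorum requires a strict majority of all configured votes.
    return votes_online > total_votes // 2


def survives_zone_loss(placement: Dict[str, str], lost_az: str) -> bool:
    # Count the votes that remain when every node in lost_az goes offline.
    remaining = sum(1 for az in placement.values() if az != lost_az)
    return has_quorum(remaining, len(placement))


# Each node (one vote each) in its own Availability Zone.
spread = {"primary": "az-a", "secondary": "az-b", "witness": "az-c"}

# Witness placed in the same zone as the primary.
stacked = {"primary": "az-a", "secondary": "az-b", "witness": "az-a"}

spread_ok = all(survives_zone_loss(spread, az) for az in ("az-a", "az-b", "az-c"))
stacked_ok = survives_zone_loss(stacked, "az-a")
```

With the spread placement, losing any single zone leaves two of three votes, so quorum holds. With the stacked placement, losing `az-a` removes two votes at once and the surviving secondary cannot form a majority.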

It is also possible to have two- and three-node SANless failover clusters in hybrid cloud configurations for HA and/or DR purposes. One such three-node configuration is a two-node HA cluster located in an enterprise data center with asynchronous data replication to AWS or another cloud service for DR protection—or vice versa.

In clusters within a single region, where data replication is synchronous, failovers are normally configured to occur automatically. For clusters with nodes that span multiple regions, where data replication is asynchronous, failovers are normally controlled manually to avoid the potential for data loss. Three-node clusters, regardless of the regions used, can also facilitate planned hardware and software maintenance for all three servers while providing continuous DR protection for the application and its data.
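The rule of thumb in the preceding paragraph can be written as a simple policy function. This is a minimal sketch of the convention described here, not a configuration format used by any specific clustering product. The function names and the node-to-region assignment are assumptions chosen for illustration.

```python
def replication_mode(primary_region: str, standby_region: str) -> str:
    # Same region: synchronous replication; across regions: asynchronous.
    return "synchronous" if primary_region == standby_region else "asynchronous"


def failover_mode(primary_region: str, standby_region: str) -> str:
    # Asynchronous standbys may lag the primary, so failover is left manual
    # to avoid promoting a node that is missing recent writes.
    if replication_mode(primary_region, standby_region) == "synchronous":
        return "automatic"
    return "manual"


# Illustrative layout: primary and one standby in one region, one standby elsewhere.
primary_region = "us-east-1"
standbys = {"server2": "us-east-1", "server3": "us-west-2"}

plan = {
    name: (replication_mode(primary_region, region), failover_mode(primary_region, region))
    for name, region in standbys.items()
}
```

Under these assumptions, `server2` is paired with synchronous replication and automatic failover, while `server3` gets asynchronous replication and manual failover.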

Maximize High Availability for SQL Server

By offering 55 Availability Zones spread across 18 geographical Regions, the AWS Global Infrastructure affords enormous opportunity to maximize high availability for SQL Server by configuring SANless failover clusters with multiple, geographically dispersed redundancies. This global footprint also enables all SQL Server applications and data to be located near end users to deliver satisfactory performance.

With a purpose-built solution, carrier-class high availability need not mean paying a carrier-like high cost. Because purpose-built failover clustering software makes effective and efficient use of EC2’s compute, storage and network resources, while being easy to implement and operate, these solutions minimize both capital and operational expenditures, making high availability more robust and more affordable than ever before.

Reproduced from TheNewStack