How to Fix Inherited Application Availability Problems

What to do when you inherit a mess

I grew up in a large immediate family, surrounded by an even larger group of well-meaning aunts, uncles, and family friends. Anyone who has been part of a large family has probably, on more than one occasion, received a hand-me-down or a freebie from well-intentioned relatives. If so, you know that beneath the surface of that cool-sounding inheritance, whether the rumored stylish clothes or the old “family car,” a nightmare could be lurking. Suddenly, your good fortune on four wheels feels like a curse that is two-thirds money pit and one-third eyesore.

So what do you do when you inherit a mess of application availability problems? Some DIYers bring in the dumpsters and start fresh. But this isn’t HGTV, and we aren’t talking about inherited furniture. You usually know you have a mess on your hands the first time you try a cluster switchover for simple, planned maintenance and your application goes offline. So what do you do when you have inherited a high availability mess?

Two Practical Tips For When You Inherit A High Availability Mess (I mean responsibility)

I. Research

One of the best things you can do before taking action is to gather as much data as you can, as quickly as you can. Of course, the state of your inheritance may dictate how fast you need to gather it. Some key things to consider as you research your application availability problems:

- Previous owner. Research the previous owner of the configuration, including their chain of command, reach of authority, background, team dynamics, and, if possible, charter. Find out what the original organizational structures were.

- Research what was done in the past to achieve high or higher availability, and what was left out. In some environments, the focus for high availability falls squarely on a portion of the infrastructure while neglecting the larger workflow. Dig into any available requirements, as well as any changes that have been implemented or added since those requirements were originally set. If you’re in the midst of a cloud migration, understand the goals of moving this environment to the cloud.

- Owners and requirements provide a lot of history. However, you’ll also want to research why key decision makers made the choices and tradeoffs they did on designs and solutions, as well as on software and hardware architecture requirements. Evaluate whether these choices were successful. Your research should focus on the original problems and the proposed solutions.

- You may also want to consider why the environment you inherited feels like a mess. For example, is it due to a lack of documentation or training, poor or missing design details, the absence of a run book, or other missing specification details?

- Research what, if any, enterprise-grade high availability software solutions have been used to complement the architecture of virtual machines, networks, and applications. Is there a current incumbent? If not, what were the previous methods for availability?
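One way to keep this research from living only in your head is to write the workflow down as data and let a few lines of code point at the gaps. The sketch below is only an illustration: the `Component` fields, the sample workflow, and the rule "fewer than two instances or no automated failover" are assumptions made for this example, not a description of any particular environment or product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    """One piece of the end-to-end workflow, as recorded during research."""
    name: str
    instances: int        # independent copies that can serve traffic
    auto_failover: bool   # True if recovery is automated, not a manual script


def availability_gaps(workflow):
    """Return names of components with no redundancy or no automated recovery."""
    return [
        c.name
        for c in workflow
        if c.instances < 2 or not c.auto_failover
    ]


# Hypothetical inventory gathered during the research phase.
workflow = [
    Component("load balancer", instances=2, auto_failover=True),
    Component("app server", instances=2, auto_failover=True),
    Component("database", instances=2, auto_failover=False),
    Component("batch scheduler", instances=1, auto_failover=False),
]

for name in availability_gaps(workflow):
    print(f"needs attention: {name}")
```

In this made-up inventory, the database and the batch scheduler are flagged: exactly the kind of "protected the infrastructure, forgot the workflow" gap the research is meant to surface.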

II. Act

Once you’ve gathered this research, your next step is to act: upgrade, improve, implement, or replace. Don’t make the mistake of crossing your fingers and hoping you never need a cluster failover.

- Upgrade. In some cases, your research will lead to a better understanding of the incumbent solution and a path to upgrade that solution to the latest version. Honestly, we have been there with our own customers: transitions are mishandled, and a solution that worked flawlessly for years becomes outdated.

- Improve. If an upgrade is not warranted, consider alternatives. The data may point to other areas of improvement, such as software or hardware tuning, migration to cloud or hybrid, network tuning, or some other identified risk or single point of failure. Perhaps your environment is due for a health check, or an increase in your workload warrants an improvement in your instance sizes, disk types, or other parameters.

- Implement. In other cases, your research will uncover some startling details about the lack of a high availability strategy or solution. In that case, use your research as a catalyst to design and implement one. This solution might call for private cloud, public cloud, or hybrid cloud architectures, coupled with enterprise-grade HA software to enable successful monitoring and recovery.

- Replace. In extreme cases, your research will lead you to a full replacement of the current environment. Sometimes this is required when a customer or partner has migrated to the cloud but their high availability software was not cloud ready. While many applications boast of being cloud ready, in some cases this is more slideware than reality. If your on-premises solution is not cloud ready, your only recourse may be to go with a solution that is capable of making the cloud journey with you, such as the SIOS Protection Suite products.
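Whichever of these paths you take, it helps to replace finger-crossing with a number. A simple approach is to probe the application at a fixed interval during a planned switchover drill and compute how long it was actually unreachable. The sketch below assumes you already have the probe results as `(timestamp_in_seconds, is_up)` pairs; the function name, the sample data, and the 30-second target are all hypothetical and stand in for whatever your own requirements say.

```python
def longest_outage(samples):
    """Return the longest continuous down period, in seconds.

    `samples` is a time-ordered list of (timestamp_seconds, is_up) pairs.
    An outage runs from the first failed probe to the next successful one,
    or to the last sample if the application never came back.
    """
    longest = 0
    down_since = None
    for timestamp, is_up in samples:
        if not is_up and down_since is None:
            down_since = timestamp            # outage begins
        elif is_up and down_since is not None:
            longest = max(longest, timestamp - down_since)
            down_since = None                 # outage ends
    if down_since is not None:
        # Still down when probing stopped: count up to the last sample.
        longest = max(longest, samples[-1][0] - down_since)
    return longest


# Hypothetical probe results from a switchover drill, one probe every 5 seconds.
samples = [
    (0, True), (5, True), (10, False), (15, False),
    (20, False), (25, True), (30, True),
]

TARGET_SECONDS = 30  # assumed recovery target; take yours from the requirements

outage = longest_outage(samples)
verdict = "within" if outage <= TARGET_SECONDS else "exceeds"
print(f"longest outage: {outage}s ({verdict} the {TARGET_SECONDS}s target)")
```

Keep in mind that the result is only as precise as the probe interval. Running the same drill before and after an upgrade, improvement, or replacement gives you a like-for-like comparison instead of a feeling.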

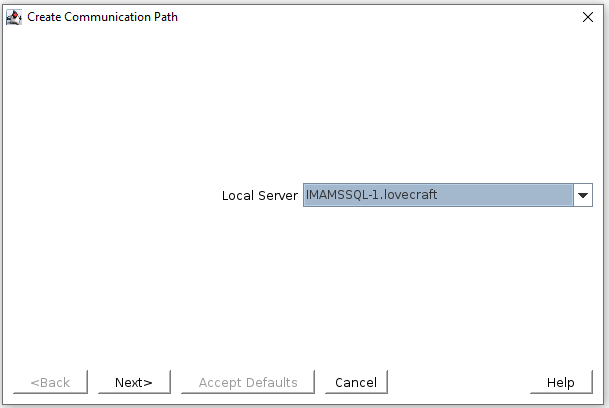

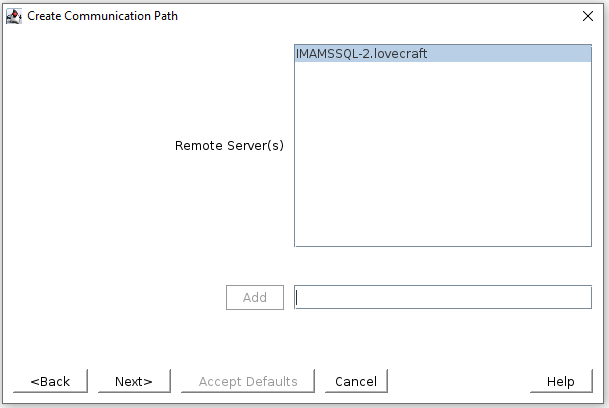

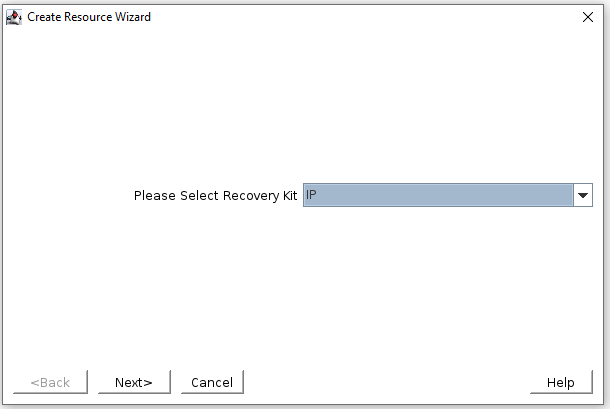

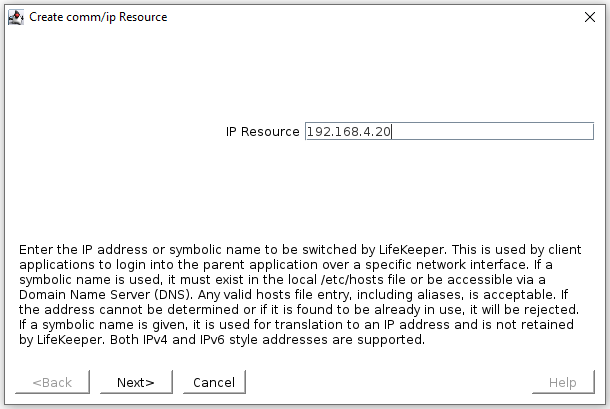

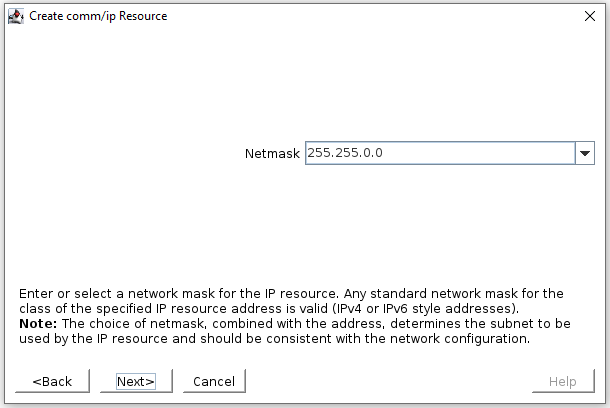

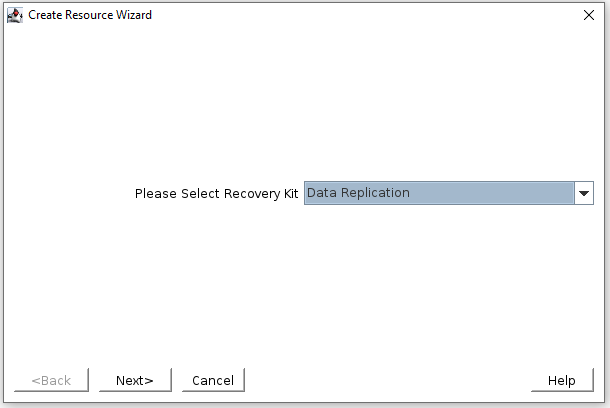

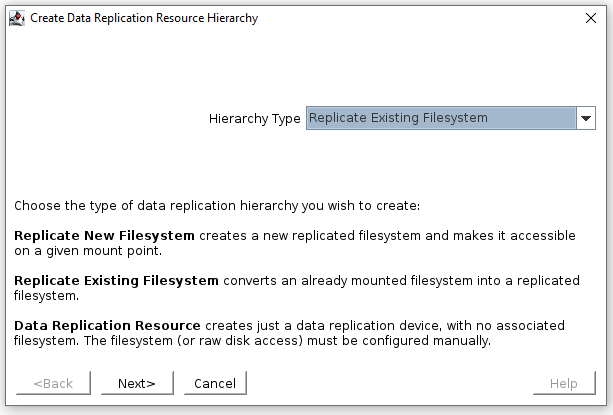

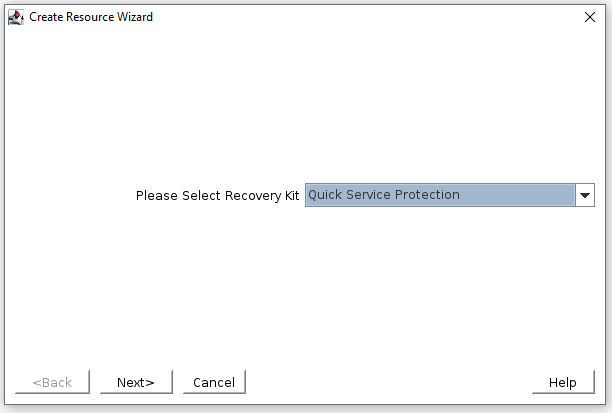

As VP of Customer Experience for SIOS Technology, I experienced a situation that shows the importance of these steps when our Services team was engaged by an enterprise partner to deploy SIOS Protection Suite products. As we worked jointly with the customer on the research, we uncovered a wealth of history. The customer professed to have only a limited number of downtime or availability issues. But our research revealed an unsustainable and highly complex hierarchy of alerts, manually executed scripts, global teams, and a hodgepodge of tools kludged together. With this information, we were able to architect a much more elegant, automated solution to replace their homemade one. Best of all, it was wizard based and included automated monitoring, recovery, and system failover protection. No more kludge. No more trial-and-error DIY. Just simple, reliable application failover and failback for HA/DR protection.

If you have inherited a host of application availability problems, contact the deployment and availability experts at SIOS Technology Corp. Our team can walk you through the research process, help you hone your requirements, and then upgrade, improve, implement, or replace the solution to provide your enterprise with higher availability.

– Cassius Rhue, Vice President, Customer Experience

Reproduced from SIOS