What’s new in SIOS LifeKeeper for Linux v 9.6.2?

SIOS LifeKeeper for Linux version 9.6.2 is now available! This new version supports v 8.6 of leading Linux distributions, supports Azure Shared Disk, and provides added protection from split brain scenarios that can occur when the network connection between cluster nodes fails.

New in SIOS LifeKeeper Linux, Version 9.6.2

SIOS LifeKeeper Linux, version 9.6.2 takes advantage of the latest bug fixes, security updates, and application support critical to their infrastructures and adds support for Miracle Linux v 8.4 for the first time as well as support for the following operating system versions:

- Red Hat Enterprise Linux (RHEL)8.6

- Oracle Linux 8.6

- Rocky Linux 8.6

New Support for Azure Shared Disk

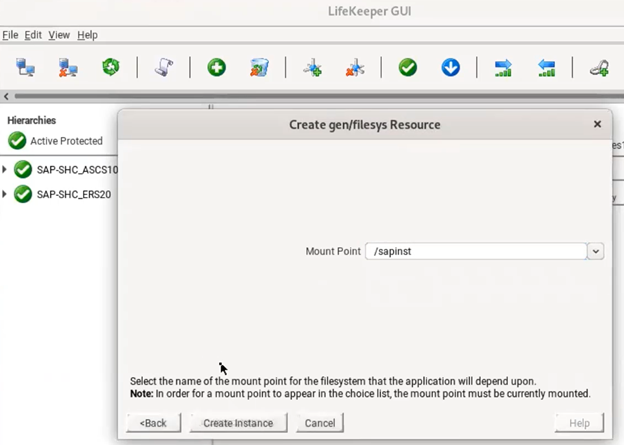

LifeKeeper for Linux v 9.6.2 is now certified for use with Azure shared disk, enabling customers to build a Linux HA cluster in Azure that leverages the new Azure shared disk resource.

Standby Node Write Protection

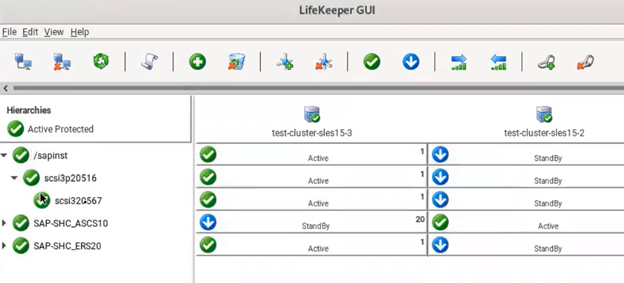

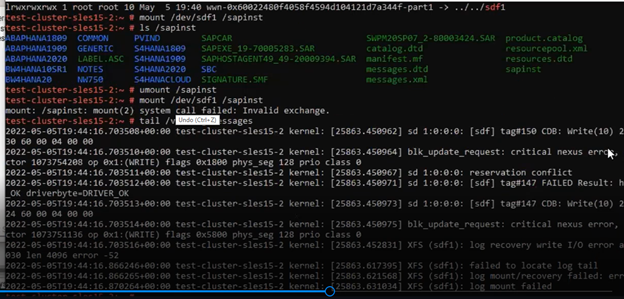

LifeKeeper can now use the new Standby Node Health Check feature to lock the standby node against attempted writes to a protected shared storage device, protecting against data corruption that can result from loss of network connection between cluster nodes.

LifeKeeper Load Balancer Health Check Application Recovery Kit (ARK)

SIOS LifeKeeper for Linux comes with Application Recovery Kits (ARKs) that add application-specific intelligence, enabling automation of cluster configuration and orchestration of failover in compliance with application best practices. The latest version of SIOS LifeKeeper includes a new ARK that makes it easier for the user to install, find and use Load Balancer functionality in AWS EC2.

Contact SIOS here for purchasing information.

Reproduced with permission from SIOS