| June 7, 2022 |

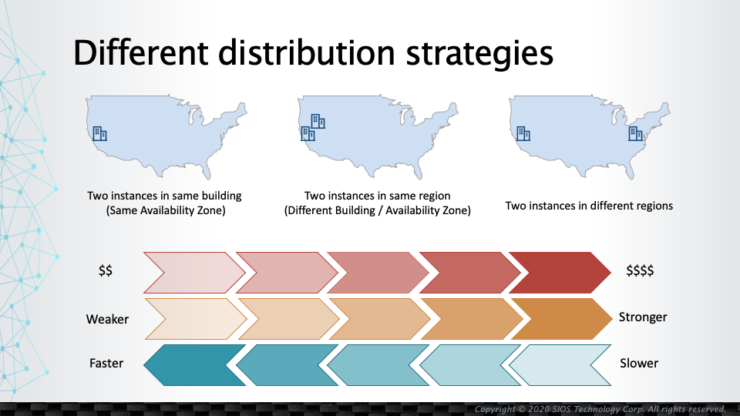

How Workloads Should be Distributed when Migrating to a Cloud Environment |

| June 3, 2022 |

Benefits of SIOS Protection Suite/LifeKeeper for Linux |

| May 29, 2022 |

SIOS Protection Suite/LifeKeeper for Linux – Integrated Components |

| May 25, 2022 |

High Availability, RTO, and RPO |

| May 21, 2022 |

SIOS Protection Suite for Linux Evaluation Guide for AWS Cloud Environments |