Major Cloud Outage Impacts Google Compute Engine – Were You Prepared?

Google first reported an “Issue” on Jun 2, 2019 at 12:25 PDT. As is now common with any type of disaster, reports of the outage first appeared on social media, which now seems to be the most reliable place to get information early in a disaster.

Many services that rely on Google Compute Engine were impacted. I have three teenage kids at home, and I knew something was up when all three emerged from their caves (aka bedrooms) at the same time with worried looks on their faces. Snapchat, YouTube and Discord were all offline!

They must have thought this was surely the first sign of the apocalypse. I reassured them that it was not the beginning of the new dark ages and suggested that they go outside and do some yard work instead. That scared them back to reality, and they quickly scurried away to find something else to occupy their time.

All kidding aside, many services were reported as down or available only in certain areas. The dust is still settling on the cause, breadth and scope of the outage, but it certainly seems to have been significant, impacting many customers and services, including Gmail and other G Suite services, Vimeo and more.

While we wait for the official root cause analysis of this latest Google Compute Engine outage, Google has reported that “high levels of network congestion in the eastern USA” caused the downtime. We will have to wait to see what they determine caused the network issues. Was it human error, a cyber-attack, a hardware failure, or something else?

Were You Prepared For This Cloud Outage?

As I wrote during the last major cloud outage, if you are running business critical workloads in the cloud, regardless of the cloud service provider, it is incumbent upon you to plan for the inevitable outage. The multi-day Azure outage of Sept 4th, 2018 was related to the failure of a secondary HVAC system to kick in during a power surge caused by an electrical storm. While the failure was confined to a single datacenter, the outage exposed multiple services that depended on that datacenter, which made the datacenter itself a single point of failure.

Have A Sound Disaster Recovery Plan

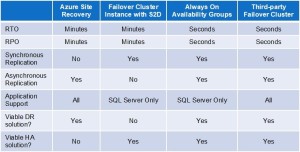

Leverage the cloud’s infrastructure to minimize risk by continuously replicating critical data between Availability Zones, Regions or even cloud service providers. In addition to data protection, a procedure for rapidly recovering business critical applications is an essential part of any disaster recovery plan. There are various replication and recovery options available. They range from services provided by the cloud vendors themselves, like Azure Site Recovery, to application specific solutions like SQL Server Always On Availability Groups, to third party solutions like SIOS DataKeeper that protect a wide range of applications running on both Windows and Linux.

A disaster recovery strategy that is wholly dependent on a single cloud provider leaves you susceptible to a scenario that impacts multiple regions within that cloud. Multi-datacenter or multi-region disasters are unlikely. However, as we saw with this recent outage and the Azure outage last fall, even if a failure is local to a single datacenter, the impact can reach across multiple datacenters or even regions within a cloud. To minimize your risk, consider a multi-cloud or hybrid cloud scenario where the disaster recovery site resides outside of your primary cloud platform.

The cloud is just as susceptible to outages as your own datacenter. You must take steps to prepare for disasters. I suggest you start by looking at your most business critical apps first. What would you do if they were offline and the cloud portal to manage them was not even available? Could you recover? Would you meet your recovery time objective (RTO) and recovery point objective (RPO)? If not, maybe it is time to re-evaluate your disaster recovery strategy.
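To make the RTO and RPO question concrete, here is a minimal sketch of how you might score the results of a disaster recovery test against your objectives. Everything in it is hypothetical: the `RecoveryTarget` class, the `meets_objectives` function, the application name and the numbers are made up for illustration and are not tied to any cloud provider or product. It assumes you have measured how long recovery took and how far behind your replica was at the moment of failure.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass
class RecoveryTarget:
    """Hypothetical recovery objectives for one application."""
    app: str
    rto: timedelta  # maximum tolerable downtime
    rpo: timedelta  # maximum tolerable window of data loss


def meets_objectives(target, measured_recovery_time, replication_lag):
    """Return (rto_ok, rpo_ok) for a single DR test.

    measured_recovery_time: how long it took to bring the app back online.
    replication_lag: how far the replica trailed the primary at failure,
    which approximates how much data would have been lost.
    """
    rto_ok = measured_recovery_time <= target.rto
    rpo_ok = replication_lag <= target.rpo
    return rto_ok, rpo_ok


# Illustrative values only: a 15 minute RTO and a 1 minute RPO.
target = RecoveryTarget("orders-db", rto=timedelta(minutes=15), rpo=timedelta(minutes=1))

# In this made-up test, recovery took 22 minutes and the replica was 30 seconds behind.
rto_ok, rpo_ok = meets_objectives(target, timedelta(minutes=22), timedelta(seconds=30))
print(f"{target.app}: RTO met={rto_ok}, RPO met={rpo_ok}")
```

In this example the data loss would have been within tolerance, but the recovery took too long, which is exactly the kind of gap a DR test is meant to expose before a real outage does.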

“By failing to prepare, you are preparing to fail.”

― Benjamin Franklin