Clustering a Non-Cluster-Aware Application with SIOS LifeKeeper

Not every application was built with clustering in mind. In fact, most were not. But that does not mean they cannot benefit from the high availability protection provided by SIOS LifeKeeper. If your application can be stopped, started, and run on another server, there is a good chance you can cluster it.

Before you jump in, consider a few key points. They make the difference between a successful clustering implementation and a frustrating trial-and-error experience.

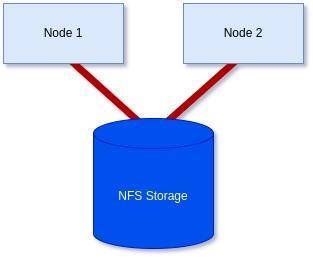

1. Move Dynamic Data to Shared or Replicated Storage

Applications typically store dynamic data such as logs, databases, caches, and other application data on local storage. In a cluster, that will not work: during failover, the standby node must have access to the same data so the application can pick up exactly where it left off.

The solution is to relocate all dynamic data to a shared disk in a SAN environment or to a replicated volume when using SIOS DataKeeper. Static files such as executables can remain local, but anything that changes at runtime should reside on storage that is accessible from all cluster nodes.
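Before relocating anything, it helps to inventory where the application writes at runtime and confirm that each location lands on the shared or replicated volume. The Python sketch below (Python 3.9+) assumes a hypothetical mount point (`/mnt/appdata`) and a hypothetical, hand-built inventory of data paths. It compares paths lexically after collapsing `..` segments, so it does not follow symlinks or junctions; on Windows, the same idea applies with `ntpath` and `PureWindowsPath` against the shared volume's drive letter.

```python
import posixpath
from pathlib import PurePosixPath

# Hypothetical mount point of the shared disk or DataKeeper-replicated volume.
SHARED_MOUNT = PurePosixPath("/mnt/appdata")

# Hypothetical inventory of locations the application writes to at runtime.
DYNAMIC_PATHS = {
    "logs": "/mnt/appdata/logs",
    "database": "/mnt/appdata/db",
    "cache": "/var/cache/myapp",
}


def paths_not_on_shared_storage(paths, mount=SHARED_MOUNT):
    """Return the names of dynamic-data locations that fall outside the shared mount."""
    outside = []
    for name, path in paths.items():
        # normpath collapses "..", so "/mnt/appdata/../etc" is not mistaken for shared.
        normalized = PurePosixPath(posixpath.normpath(path))
        # is_relative_to compares whole path components, so "/mnt/appdata2" does not match.
        if not normalized.is_relative_to(mount):
            outside.append(name)
    return outside


for name in paths_not_on_shared_storage(DYNAMIC_PATHS):
    print(f"{name}: {DYNAMIC_PATHS[name]} is on local storage; relocate it")
```

With the sample inventory, only the cache directory is flagged, which is exactly the kind of location that is easy to overlook.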

2. Update Application Host References for Clustered Environments

Many applications refer to the local system by name, FQDN, or IP address. That is fine in a standalone configuration, but in a cluster the application needs to bind to or communicate through the cluster’s Virtual IP (VIP).

If the application or its configuration files reference:

- localhost

- the node’s hostname or FQDN

- the node’s static IP address

then you will likely need to change those references to the VIP or to a hostname that resolves to the VIP. Typical places to check include registry keys, configuration files, and any connection strings the application uses to reach itself or other services.
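A quick way to find node-specific references is to scan configuration text for `localhost`, the node's hostname or FQDN, and its static IP. The Python sketch below reports each match with its line number rather than rewriting it, so every hit can be reviewed before it is pointed at the VIP. The hostname, FQDN, IP address, and sample configuration are hypothetical, and registry values would need to be exported to text before scanning.

```python
import re

# Hypothetical sample configuration for illustration only.
SAMPLE_CONFIG = """\
listen_address = 10.0.0.11
db_url = jdbc:sqlserver://node1.example.com:1433
cache_host = localhost
peer = node12
"""


def node_reference_pattern(hostname, fqdn, static_ip):
    """Build a regex matching localhost, this node's names, or its static IP."""
    # Longest token first so the FQDN wins over the bare hostname it contains.
    tokens = sorted({"localhost", hostname, fqdn, static_ip}, key=len, reverse=True)
    alternatives = "|".join(re.escape(token) for token in tokens)
    # Reject matches embedded in longer names or addresses (e.g. node12, 10.0.0.110).
    return re.compile(rf"(?<![\w.-])({alternatives})(?![\w-]|\.\w)", re.IGNORECASE)


def find_host_references(config_text, hostname, fqdn, static_ip):
    """Return (line_number, token) for every node-specific reference found."""
    pattern = node_reference_pattern(hostname, fqdn, static_ip)
    hits = []
    for lineno, line in enumerate(config_text.splitlines(), start=1):
        for match in pattern.finditer(line):
            hits.append((lineno, match.group(1)))
    return hits


for lineno, token in find_host_references(
    SAMPLE_CONFIG, "node1", "node1.example.com", "10.0.0.11"
):
    print(f"line {lineno}: references {token!r}")
```

Each reported line is a candidate for the VIP; a reference such as `peer = node12`, which points at a different machine, is deliberately left alone.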

3. Write Custom Start, Stop, and Monitor Scripts

Cluster-aware applications include logic that tells the cluster how to start, stop, and monitor the service. Non-cluster-aware applications do not. That is where SIOS LifeKeeper Application Recovery Kits (ARKs) come in.

If no ARK exists for your application, you can create custom scripts that:

- Start the service or process

- Stop it cleanly before switchover

- Monitor its health, for example by checking a port, log file, or process
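To illustrate the monitor piece, the Python sketch below checks health by attempting a TCP connection to the application's port. The VIP and port values are hypothetical, and the exit-code convention (0 for healthy, non-zero for failed) is an assumption: confirm the convention your recovery kit expects in the LifeKeeper documentation for your platform.

```python
import socket

# Hypothetical values: substitute your VIP and the port your application listens on.
VIP = "10.0.0.100"
APP_PORT = 8080


def port_is_listening(host, port, timeout=5.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def monitor_exit_code(host=VIP, port=APP_PORT):
    """Map the health check to a process exit code.

    Assumption: the cluster reads the script's exit status, with 0 meaning
    healthy and non-zero meaning failed. Verify this against the LifeKeeper
    documentation for your recovery kit and platform.
    """
    return 0 if port_is_listening(host, port) else 1
```

A monitor script built on this would end with something like `sys.exit(monitor_exit_code())`. Note that a successful connect only proves that something is listening; a stricter check might send a request and validate the response.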

In some cases, protecting an application is as simple as starting and stopping a service. For those situations, LifeKeeper provides the Quick Service Protection (QSP) Recovery Kit. With QSP, you can simply select the service you want to protect, eliminating the need to write any code. LifeKeeper will automatically handle start, stop, and monitoring operations for that service.

These options make it easy to protect a wide range of applications, from simple Windows or Linux services to complex multi-component systems, all within the same clustering framework.

4. Handle Encryption Keys Properly Across All Cluster Nodes

If your application encrypts data at rest, each cluster node must be able to decrypt it. This means the encryption key must be accessible and consistent across all nodes. Depending on your setup, that might involve synchronizing a local key store or using a centralized key management solution.

The key takeaway is that every node must be able to access the encryption key securely and consistently when it becomes active. Otherwise, the application may start but fail to access its data after failover.
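For keys stored in a file, one way to confirm consistency is to compare a fingerprint of the key on every node. The Python sketch below hashes the key file with SHA-256, so nodes can be compared without copying the key itself, and then lists the nodes that disagree with a reference node. How you collect fingerprints from each node, and the node names used, are hypothetical; keys held in a centralized key management service would instead call for checking that each node can authenticate to that service.

```python
import hashlib
from pathlib import Path


def key_fingerprint(key_path):
    """SHA-256 of the key file, so nodes can be compared without exchanging the key."""
    return hashlib.sha256(Path(key_path).read_bytes()).hexdigest()


def nodes_with_different_key(fingerprints, reference_node):
    """Return the nodes whose key fingerprint differs from the reference node's."""
    expected = fingerprints[reference_node]
    return sorted(node for node, fp in fingerprints.items() if fp != expected)
```

An empty result from `nodes_with_different_key` means every node that reported a fingerprint holds the same key as the reference node.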

5. Consider How Clients Reconnect After a Failover

When an application fails over from one node to another, there is a brief interruption while the new active node brings the VIP online and starts the application. For clients connected to that service, the impact depends entirely on how they handle connection loss.

If client retry logic is built in, users might never notice an interruption. The client will automatically reconnect once the VIP and service are available again.

If the client does not include retry logic, users may need to manually refresh or restart the connection after a failover.

It is important to understand how your client behaves and test how it responds during failover. Sometimes adding a simple connection retry loop or adjusting a connection timeout setting is all that is needed for a seamless user experience.
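A retry loop with exponential backoff is one common way to add that reconnect behavior. The Python sketch below wraps any connect function; the attempt count and delays are illustrative assumptions (the defaults wait 1, 2, 4, 8, and 15 seconds between six attempts, 30 seconds in total) and should be sized to the failover time you measure in testing.

```python
import time


def connect_with_retry(connect, attempts=6, initial_delay=1.0, max_delay=15.0,
                       retry_on=(ConnectionError, TimeoutError), sleep=time.sleep):
    """Call connect() until it succeeds, doubling the wait between tries.

    The wait is capped at max_delay. If every attempt fails, the last
    error is re-raised so the caller can report it.
    """
    delay = initial_delay
    for attempt in range(1, attempts + 1):
        try:
            return connect()
        except retry_on:
            if attempt == attempts:
                raise
            sleep(delay)
            delay = min(delay * 2, max_delay)
```

For example, `connect_with_retry(lambda: socket.create_connection(("app-vip.example.com", 8080), timeout=5))` retries a TCP connection to a hypothetical VIP hostname and port. The `sleep` parameter exists only so the delays can be observed in tests.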

6. Verify Application Licensing Requirements for Cluster Deployments

One often overlooked step is licensing. When you cluster an application, it is installed on every node in the cluster, but only one instance (the active one) runs at a time. Some vendors provide special active/passive cluster licenses, while others require a license for every installed instance.

Always check with your application vendor before deployment. A quick conversation up front can save hours of licensing trouble later.

7. Test All Application and Cluster Components Thoroughly

Testing is one of the most important and most frequently overlooked parts of any clustering project.

Do not stop at testing failover. Test every function of the application while it is protected. This includes:

- Startup and shutdown sequences

- All required services and background tasks

- Any component that reads, writes, or caches data

- Any process that relies on service dependencies

- Client behavior before, during, and after failover

If the application uses custom scripts or QSP, make sure each step works correctly under load. Thorough testing catches issues early and gives you confidence that the solution will behave correctly during a real incident.
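To observe client-side behavior during a switchover, you can poll the VIP from a client machine and record how long it was unreachable. The Python sketch below does that with a plain TCP probe; the VIP address, port, and polling interval are hypothetical, and the measured outage is only as precise as the interval. It measures reachability, not whether the application served requests correctly, so it complements rather than replaces functional tests.

```python
import socket
import time


def tcp_probe(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def measure_outages(probe, samples, interval=0.5, clock=time.monotonic, sleep=time.sleep):
    """Poll probe() `samples` times and return (start, end) times of each outage.

    An outage starts at the first failed probe and ends at the first
    successful probe after it; one still in progress at the end has end=None.
    """
    outages = []
    down_since = None
    for i in range(samples):
        now = clock()
        up = probe()
        if not up and down_since is None:
            down_since = now
        elif up and down_since is not None:
            outages.append((down_since, now))
            down_since = None
        if i < samples - 1:
            sleep(interval)
    if down_since is not None:
        outages.append((down_since, None))
    return outages
```

For example, `measure_outages(lambda: tcp_probe("10.0.0.100", 8080), samples=240)` polls for at least two minutes at the default 0.5-second interval while you trigger a switchover; subtracting each start from its end gives the outage your clients would experience.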

Achieving HA for Non-Cluster-Aware Applications

Clustering a non-cluster-aware application with SIOS LifeKeeper is not difficult, but it does require some planning. Move your data to shared or replicated storage, point everything to the cluster’s VIP, script the start, stop, and monitor logic (or use QSP when appropriate), make sure encryption keys are available on all nodes, and confirm licensing requirements.

Do not forget to test how your clients respond to failovers, because true high availability means both your servers and your users stay connected.

Follow these steps and you will find that even the most “standalone” application can achieve enterprise-grade high availability. Request a demo today to see how SIOS LifeKeeper brings reliable HA to non-cluster-aware applications.

Author: David Bermingham, Senior Technical Evangelist at SIOS

Reproduced with permission from SIOS