How to recreate the file system and mirror resources to ensure the size information is correct

When working with high availability (HA) clustering, it’s essential to ensure that the configuration of every node in the cluster is consistent with the others. These ‘mirrored’ configurations minimize points of failure in the cluster, providing a higher standard of HA protection. For example, we have seen situations in which the mirror size was updated on the source node but the same information was not updated on the target node. The mirror size mismatch prevented LifeKeeper from starting on the target node during a failover. Below are the recommended steps for recreating the mirror resource on the target node with the same size information as the source:

Steps:

- Verify, from the application’s perspective, that the data on the source node is valid and consistent

- Back up the file system on the source node (the source of the mirror)

- Run /opt/LifeKeeper/bin/lkbackup -c to back up the LifeKeeper configuration on both nodes

- Take all resources out of service. In our example, the resources are in service on node sc05, which is the source of the mirror; sc06 is the target system (the target of the mirror).

  - In the right pane of the LifeKeeper GUI, right-click the DataKeeper resource that is in service.

  - Click Out of Service from the resource popup menu.

  - A dialog box confirms that the selected resource is to be taken out of service and notes any resource dependencies associated with the action. Click Next.

  - An information box shows the results of taking the resource out of service. Click Done.

- Verify that all resources are out of service and the file systems are unmounted:

  - Use the command cat /proc/mdstat on the source to verify that no mirror is active

  - Use the mount command on the source to make sure the file system is no longer mounted

  - Use /opt/LifeKeeper/bin/lcdstatus -q on the source to make sure all resources are OSU (out of service, unimpaired)

- In the LifeKeeper GUI, break the dependency between the IP resource (VIP) and the file system resource (/mnt/sps). Right-click the VIP resource, select Delete Dependency, and then select the file system resource (/mnt/sps) for the Child Resource Tag.

  This results in two hierarchies: one with the IP resource (VIP), and one with the file system resource (/mnt/sps) and the mirror resource (datarep-sps).
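Before deleting the hierarchy, the out-of-service checks from the verification step can be re-run as a script. The following is a minimal Python sketch, not a SIOS tool. It assumes the DataKeeper mirror shows up as an mdN entry in /proc/mdstat (which is what the cat /proc/mdstat check relies on) and reads /proc/mounts, which lists the same mounts as the mount command. The mount point /mnt/sps is this article's example value, and the sketch does not replace the lcdstatus -q check.

```python
import re
from pathlib import Path

# Example value from this article; replace with your own mount point.
MOUNT_POINT = "/mnt/sps"


def read_or_empty(path: str) -> str:
    """Return a file's text, or "" if it does not exist (e.g. md not loaded)."""
    p = Path(path)
    return p.read_text() if p.exists() else ""


def active_md_arrays(mdstat_text: str) -> list:
    """Return md array names listed in /proc/mdstat, such as ["md0"]."""
    # Array lines look like "md0 : active raid1 nbd10[1] sdb1[0]".
    return re.findall(r"^(md\d+)\s*:", mdstat_text, flags=re.MULTILINE)


def is_mounted(mount_point: str, mounts_text: str) -> bool:
    """Return True if mount_point is a mount target in /proc/mounts text."""
    # Each line is "device mountpoint fstype options dump pass".
    return any(
        len(fields) >= 2 and fields[1] == mount_point
        for fields in (line.split() for line in mounts_text.splitlines())
    )


def pre_delete_problems(mount_point: str, mdstat_text: str, mounts_text: str) -> list:
    """List reasons it is not yet safe to delete the hierarchy ([] means OK)."""
    problems = []
    arrays = active_md_arrays(mdstat_text)
    if arrays:
        problems.append("mirror still active: " + ", ".join(arrays))
    if is_mounted(mount_point, mounts_text):
        problems.append(mount_point + " is still mounted")
    return problems


if __name__ == "__main__":
    found = pre_delete_problems(
        MOUNT_POINT, read_or_empty("/proc/mdstat"), read_or_empty("/proc/mounts")
    )
    print("\n".join(found) if found else "No active mirror and nothing mounted on " + MOUNT_POINT)
```

An empty result only means the md driver and the kernel mount table agree that the mirror is stopped; confirm the OSU state with lcdstatus -q as well.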

- Delete the hierarchy with the file system and mirror resources. Right-click /mnt/sps and select Delete Resource Hierarchy.

- On the source, mount the file system with ‘mount <device> <directory>’ so that it can be selected as the existing mount point when the mirror is recreated.

Example: mount /dev/sdb1 /mnt/sps

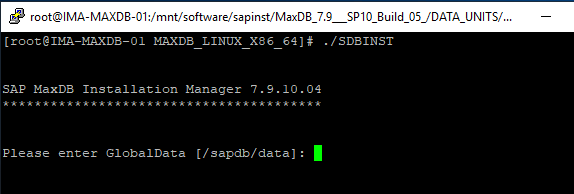

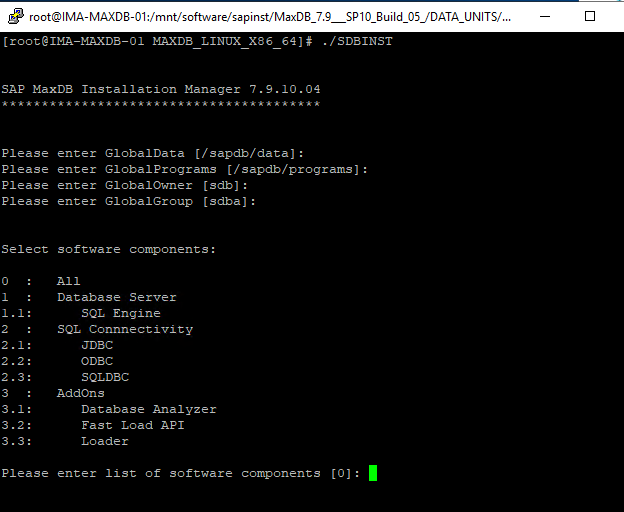
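Before starting the create wizard, it can help to confirm that the file system is mounted from the expected device. This hedged Python sketch reads /proc/mounts and reports which device and file system type back the mount point. /dev/sdb1 and /mnt/sps are only this article's example values, and on some systems the device may be listed under a different name (for example a /dev/mapper path).

```python
from pathlib import Path
from typing import Optional, Tuple

# Example values from this article; replace with your own device and mount point.
DEVICE = "/dev/sdb1"
MOUNT_POINT = "/mnt/sps"


def find_mount(mount_point: str, mounts_text: str) -> Optional[Tuple[str, str]]:
    """Return (device, fstype) mounted on mount_point, or None if not mounted."""
    result = None
    for line in mounts_text.splitlines():
        fields = line.split()
        # /proc/mounts fields: device, mount point, fstype, options, dump, pass.
        if len(fields) >= 3 and fields[1] == mount_point:
            # Keep the last match: a later entry is mounted on top of earlier ones.
            result = (fields[0], fields[2])
    return result


if __name__ == "__main__":
    mounts_file = Path("/proc/mounts")
    text = mounts_file.read_text() if mounts_file.exists() else ""
    found = find_mount(MOUNT_POINT, text)
    if found is None:
        print(MOUNT_POINT, "is not mounted")
    elif found[0] != DEVICE:
        print(MOUNT_POINT, "is mounted from", found[0], "not", DEVICE)
    else:
        print(DEVICE, "is mounted on", MOUNT_POINT, "as", found[1])
```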

- Using the GUI, recreate the mirror and file system resources with the following values:

  - Recovery Kit: Data Replication

  - Switchback Type: Intelligent

  - Server: the source node (sc05 in our example)

  - Hierarchy Type: Replicate Existing Filesystem

  - Existing Mount Point: <select your mount point>. It is /mnt/sps in our example.

  - Data Replication Resource Tag: <Take the default>

  - File System Resource Tag: <Take the default>

  - Bitmap File: <Take the default>

  - Enable Asynchronous Replication: Yes

- Once the hierarchy is created, extend the mirror and file system hierarchy with these values:

  - Target Server: the target node (sc06 in our example)

  - Switchback Type: Intelligent

  - Template Priority: 1

  - Target Priority: 10

- Once the pre-extend checks complete, select Next and enter these values:

  - Target Disk: <Select the target disk for the mirror>. It is /dev/sdb1 in our example.

  - Data Replication Resource Tag: <Take the default>

  - Bitmap File: <Take the default>

  - Replication Path: <Select the replication path in your environment>

  - Mount Point: <Select the mount point in your environment>. It is /mnt/sps in our example.

  - Root Tag: <Take the default>

When the extend operation is complete, select Finish and then Done.
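Because the point of this procedure is matching size information, the source and target partition sizes can be compared directly; ideally this is done before choosing the Target Disk in the extend wizard. This is a minimal Python sketch that reads the kernel's sysfs size file, which always counts 512-byte units. The partition name sdb1 is this article's example, and the sketch assumes, as with block-level mirrors generally, that the target partition must be at least as large as the source.

```python
import sys
from pathlib import Path

# sysfs reports block device sizes in 512-byte units, whatever the sector size.
SECTOR_BYTES = 512


def parse_sysfs_size(size_text: str) -> int:
    """Convert the contents of /sys/class/block/<dev>/size to bytes."""
    return int(size_text.strip()) * SECTOR_BYTES


def compare_sizes(source_bytes: int, target_bytes: int) -> str:
    """Describe the target partition relative to the source partition."""
    if target_bytes == source_bytes:
        return "match"
    if target_bytes > source_bytes:
        return "target larger by %d bytes" % (target_bytes - source_bytes)
    return "target SMALLER by %d bytes" % (source_bytes - target_bytes)


if __name__ == "__main__":
    # Run on each node; "sdb1" is this article's example partition name.
    name = sys.argv[1] if len(sys.argv) > 1 else "sdb1"
    size_file = Path("/sys/class/block", name, "size")
    if size_file.exists():
        print(name, parse_sysfs_size(size_file.read_text()), "bytes")
    else:
        print("no block device named", name)
```

Run the script on sc05 and on sc06, then pass the two byte counts to compare_sizes(). A smaller target is the kind of mismatch this article warns about and is worth resolving before relying on the mirror.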

- In the LifeKeeper GUI, recreate the dependency between the IP resource (VIP) and the file system resource (/mnt/sps). Right-click the VIP resource, select Create Dependency, and select /mnt/sps for the Child Resource Tag.

- At this point, the mirror should be performing a full resync of the entire file system. In the right pane of the LifeKeeper GUI, right-click the VIP resource and select In Service to restore the IP resource (VIP). Select the source system where the mirror is in service (sc05 in our example), and verify that the application restarts and the IP address is accessible.
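To follow the full resync, the progress line that the Linux md driver writes to /proc/mdstat can be polled. This is a minimal Python sketch, assuming the DataKeeper mirror is an md device listed in /proc/mdstat, as the earlier cat /proc/mdstat step implies. Whether the kernel labels the line resync or recovery can vary, so the sketch accepts both; it is not a SIOS utility.

```python
import re
from pathlib import Path
from typing import Dict

ARRAY_RE = re.compile(r"^(md\d+)\s*:")
# Progress lines look like:
# "[==>.....]  resync = 12.6% (1323456/10476544) finish=2.3min speed=65000K/sec"
PROGRESS_RE = re.compile(r"\b(resync|recovery)\s*=\s*(\d+(?:\.\d+)?)%")


def resync_progress(mdstat_text: str) -> Dict[str, float]:
    """Map each md array that is resyncing to its percent complete."""
    progress: Dict[str, float] = {}
    current = None
    for line in mdstat_text.splitlines():
        array = ARRAY_RE.match(line)
        if array:
            current = array.group(1)  # progress lines follow their array line
            continue
        found = PROGRESS_RE.search(line)
        if found and current is not None:
            progress[current] = float(found.group(2))
    return progress


if __name__ == "__main__":
    mdstat = Path("/proc/mdstat")
    status = resync_progress(mdstat.read_text() if mdstat.exists() else "")
    if not status:
        print("no resync in progress")
    for name, percent in status.items():
        print(name, "%.1f%% synced" % percent)
```

Running it periodically (for example from watch) shows the percentage climbing until the progress line disappears, at which point the mirror is fully synchronized.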

Reproduced with permission from SIOS