Toyo Gosei Ltd. Migrates SAP Enterprise System to Azure: To Build a “System That Never Stops” With Replication

“We got several proposals for both on-premises and cloud, and decided to migrate to Microsoft Azure with Fujitsu’s proposal of SIOS DataKeeper, which best fits our requirements,” said Akihiko Kobayashi, a System Representative.

Toyo Gosei is a long-established chemical manufacturer that has been in business for 65 years. The company’s main product, photosensitive materials for photoresist, is indispensable for manufacturing liquid crystal displays and semiconductor integrated circuits. The company is also focusing on technological development of the most advanced photosensitive materials.

In 2007, the company had to select a successor to GLOVIA/Process C1, which it had been using as its core business system. After receiving proposals from several vendors, it chose SAP, the ERP system from the German company SAP, because of its solid J-SOX support. By around 2015, the servers installed when SAP was introduced had reached the end of maintenance.

IT Infrastructure

The company’s IT infrastructure is an on-premises VMware-based data center and a remote data center for business continuity/disaster protection. Since most of their applications run on the Microsoft Windows operating system, they used guest-level Windows Server failover clustering in their VMware environment to provide high availability and disaster protection.

The Challenge – Migrating to Azure

The migration to the cloud was motivated by a desire to be free of on-premises system maintenance, a need for flexibility to scale up, and a need to prepare for disasters based on the company’s experience during the Great East Japan Earthquake.

Their decision to move to the cloud was driven by the fact that the servers on their premises physically shifted during the earthquake, which almost led to a failure.

When migrating to Azure, the company built a backup system to cope with both system failures and disasters. “SAP has all the data necessary for our business. If SAP stops, the production process also stops. If the outage continues for two or three days, shipment, payment and billing also stop. The SAP system cannot be stopped,” said Kobayashi.

The first step was to set up an SAP backup system on Azure that takes a daily backup of the production system in the East Japan region of Azure and a weekly backup of the standby system in the West Japan region.

Implementation

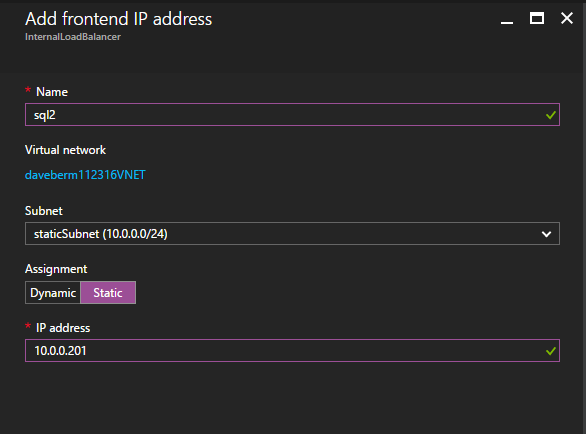

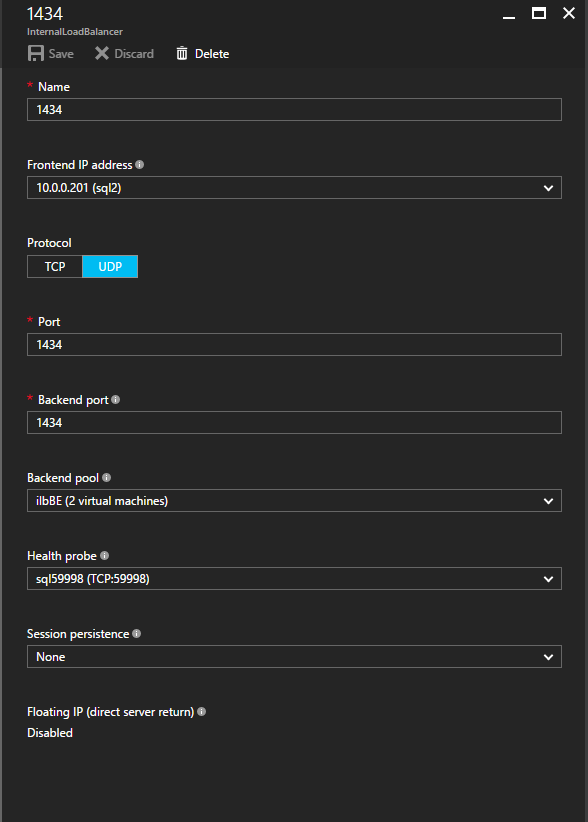

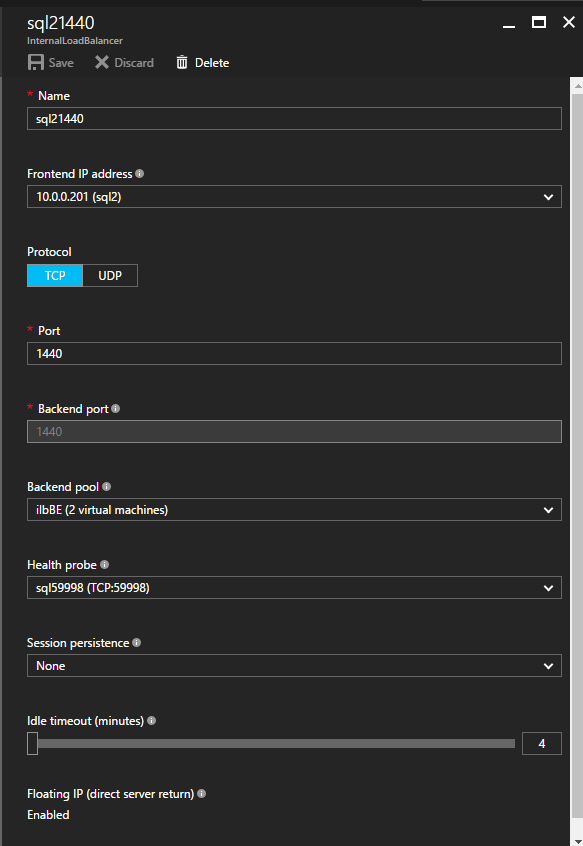

“However, a backup is just a backup. Fundamentally, we need to make the production system redundant in order to prevent it from stopping. On AWS, which we used for information systems, shared disks were available in a redundant configuration. However, Azure does not support shared disks. For this reason, we decided to use SIOS Technology’s DataKeeper, which enables data replication on Azure,” said Kobayashi.

They created a cluster configuration between the storage systems connected to the redundant SAP production system and use DataKeeper to replicate the data so that it stays consistent. This provides the same availability as a shared-disk configuration, even on Azure, where shared disks are not supported.
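The idea of replacing a shared disk with replicated local storage can be illustrated with a small model. The following is a minimal sketch, not DataKeeper's actual implementation or API: the `Node` and `MirroredVolume` classes, the node names, and the sample block contents are all hypothetical, and real replication works at the volume level over a network rather than on in-memory dictionaries.

```python
class Node:
    """Hypothetical cluster node with its own local storage (no shared disk)."""

    def __init__(self, name):
        self.name = name
        self.blocks = {}    # block number -> bytes, standing in for a local disk
        self.online = True


class MirroredVolume:
    """Toy synchronous mirror: each write lands on both nodes before it returns."""

    def __init__(self, primary, standby):
        self.primary = primary
        self.standby = standby

    def write(self, block_no, data):
        if not self.primary.online:
            raise RuntimeError("primary is offline; fail over first")
        self.primary.blocks[block_no] = data
        # Replicate to the standby so that both copies stay consistent.
        if self.standby.online:
            self.standby.blocks[block_no] = data

    def read(self, block_no):
        return self.primary.blocks[block_no]

    def failover(self):
        """Mark the current primary as failed and promote the standby."""
        self.primary.online = False
        self.primary, self.standby = self.standby, self.primary
        return self.primary


if __name__ == "__main__":
    node1 = Node("sap-node-1")   # assumed names, for illustration only
    node2 = Node("sap-node-2")
    volume = MirroredVolume(node1, node2)

    volume.write(0, b"order-1001")
    promoted = volume.failover()

    # The promoted node already holds the data, so service can continue.
    print(promoted.name, volume.read(0))
```

Because every acknowledged write already exists on the standby, the standby can take over with the same data a shared disk would have held, which is the property the configuration described above relies on.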

“We have had stable operations since the initial stage, when a failover occurred,” said Kobayashi. “Regarding SIOS DataKeeper, the only thing we have to do is renew the maintenance contract.”

The Results

As part of their mid-term plan, they need to prepare for the “SAP 2025 problem,” when support for the current SAP version will expire. They have not yet drawn up a specific plan, but Kobayashi said, “When we move to the new S/4HANA architecture, if clustering is required, we will implement SIOS DataKeeper because we trust it.”

SIOS DataKeeper is a reliable partner for Kobayashi. “Because you cannot stop the production system, it is IT personnel’s responsibility to choose a reliable tool,” he said.
